Building Serverless .NET Data APIs with Docker on the Google Cloud Platform

In this short guide, I’m going to show you how I built a few Data APIs for querying data from a BigQuery dataset. These APIs will be packaged in a Docker container and deployed using a GCP serverless service called Cloud Run.

Architecture

The reason for producing this guide was to show how easy it is to work with serverless components. First, I’ll list the services I’ve used and how they fit into the overall solution.

Cloud Run: Cloud Run is a fully managed compute platform that automatically scales your stateless containers. In this solution Cloud Run will handle the API requests and as the service is fully managed we don’t have to think about scaling. [Cloud Run documentation]

Cloud Build: Cloud Build is a service that executes your builds on Google Cloud Platform infrastructure. Cloud Build can import source code from Google Cloud Storage, Cloud Source Repositories, GitHub, or Bitbucket, execute a build to your specifications, and produce artifacts such as Docker containers or Java archives. Cloud Build executes your build as a series of build steps, where each build step is run in a Docker container. A build step can do anything that can be done from a container irrespective of the environment. When our .NET Core source code (or any other code we choose to use) is ready, we will use Cloud Build to build our Docker image and then host it in our GCP Container Registry. [Cloud Build documentation]

Container Registry: Container Registry is a single place for your team to manage Docker images, perform vulnerability analysis, and decide who can access what with fine-grained access control. Existing CI/CD integrations let you set up fully automated Docker pipelines to get fast feedback. In our solution we will use Container Registry to host the Docker image containing our .NET Core Web API. [Container Registry documentation]

BigQuery: BigQuery is a Serverless, NoOps, highly scalable, and cost-effective cloud data warehouse designed for business agility. As it is NoOps we do not need to consider the infrastructure or the services of a DBA. We will be using BigQuery to get access to a public dataset containing the data we wish to expose through our API. [BigQuery documentation]

Cloud Shell: Cloud Shell provides you with command-line access to your cloud resources directly from your browser. We will not be using Cloud Shell as a development environment in this solution but we will be using it to run our commands. [Cloud Shell documentation]

Accessing the data

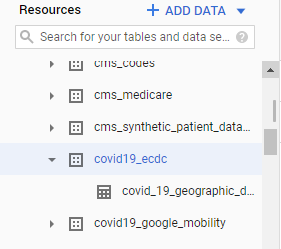

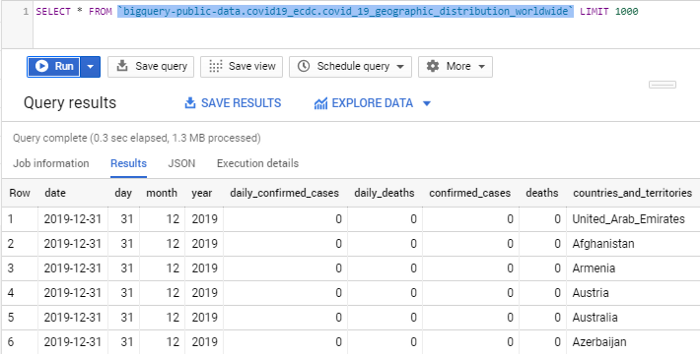

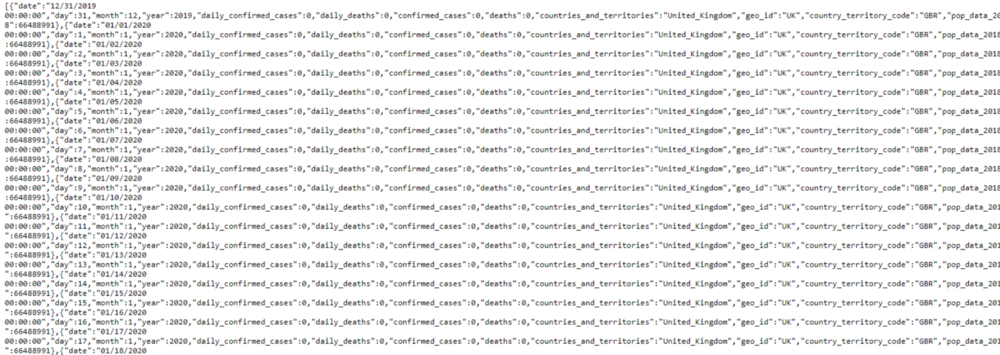

As COVID-19 seems to be on everyone’s mind at the moment, I will be using a BigQuery public dataset called covid19_ecdc. This dataset contains COVID-19 data from across the globe, including confirmed cases, deaths by country and deaths by date.

Data

If we look at this in BigQuery we can identify the schema of the data, which we will need in order to build our .NET class. For this solution our API will return:

- All the data

- All the data for a specific country

- All the data for all countries for a specific day.

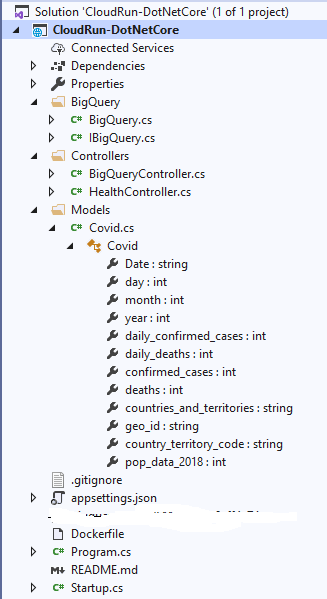

.Net Project

Building a REST API with .NET Core is a fairly straightforward task. The aim of this solution is not to demonstrate the use of .NET Core but to show how we can use GCP to run our code in a serverless fashion. I apologise in advance if my .NET code isn’t the best. I developed the code using Visual Studio 2019 and .NET Core 3.1. To make things easier, I have published my code to a GitHub repository.

We are now going to look at the Visual Studio solution and highlight some of the code and what it does.

The lines of code that need to be modified to suit your own environment are in the BigQuery.cs file. You will need to include your GCP project ID and the name of your GCP credentials JSON file (this must be placed in the project root folder).

const string PROJECT_ID = "YOUR PROJECT ID";

public BigQueryClient GetBigqueryClient()

{

// Name of the service account key file placed in the project root folder.

string jsonpath = "NAME OF YOUR CREDENTIAL JSON FILE";

// Load the service account credential from the key file (one load is enough).

var credential = GoogleCredential.FromFile(jsonpath);

return BigQueryClient.Create(PROJECT_ID, credential);

}
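
Hard-coding the project ID and key file name means editing the source for every environment. As a minimal sketch of one alternative, the two values could be read from environment variables, with the placeholders above as fallbacks. The `BigQuerySettings` class and the variable names `GCP_PROJECT_ID` and `GCP_CREDENTIALS_FILE` are hypothetical and are not part of the original project.

```csharp
using System;

// Hypothetical helper, not part of the original project: resolves the two
// values that BigQuery.cs needs from environment variables.
public static class BigQuerySettings
{
    // Returns the environment variable's value, or the fallback when the
    // variable is unset or blank.
    public static string Resolve(string variableName, string fallback)
    {
        var value = Environment.GetEnvironmentVariable(variableName);
        return string.IsNullOrWhiteSpace(value) ? fallback : value.Trim();
    }

    // Assumed variable names; choose whatever suits your deployment.
    public static string ProjectId =>
        Resolve("GCP_PROJECT_ID", "YOUR PROJECT ID");

    public static string CredentialPath =>
        Resolve("GCP_CREDENTIALS_FILE", "NAME OF YOUR CREDENTIAL JSON FILE");
}
```

With something like this in place, GetBigqueryClient() could use `BigQuerySettings.ProjectId` and `BigQuerySettings.CredentialPath` in place of the hard-coded strings.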

Dockerfile

A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image. This project uses a standard Microsoft ASP.NET Core 3.1 image, copies the published code to a folder in the image and then uses the ENTRYPOINT to run the .NET code on container start.

ENV ASPNETCORE_URLS=http://*:${PORT}

COPY bin/Debug/netcoreapp3.1/publish/ App/

WORKDIR /App

ENTRYPOINT ["dotnet", "CloudRun-DotNetCore.dll"]
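
Cloud Run tells the container which port to listen on through the PORT environment variable, which is what the ASPNETCORE_URLS line relies on. Should you prefer to resolve the listening URL in code instead, a rough sketch of the same idea follows. The `ListenUrl` helper and the fallback of 8080 for local runs are assumptions for illustration and are not taken from the original project.

```csharp
using System;

// Hypothetical helper: builds the URL the web server should bind to from
// the PORT environment variable that Cloud Run injects into the container.
public static class ListenUrl
{
    public static string FromPort(string portValue, int defaultPort = 8080)
    {
        // Fall back to the default when PORT is missing or not a valid port.
        if (!int.TryParse(portValue, out int port) || port < 1 || port > 65535)
        {
            port = defaultPort;
        }

        // Bind to all interfaces so the container is reachable from outside.
        return $"http://0.0.0.0:{port}";
    }

    // Convenience wrapper that reads PORT from the current environment.
    public static string FromEnvironment() =>
        FromPort(Environment.GetEnvironmentVariable("PORT"));
}
```

The resulting string could then be handed to `UseUrls(...)` when the web host is configured in Program.cs.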

Requests

To get the data we specified, we need to send three different queries to BigQuery. I have coded a standard get_data() method for all three but pass in different parameters to build up the SQL dynamically. To get the data for all countries, our BigQuery SQL is:

SELECT * FROM `bigquery-public-data.covid19_ecdc.covid_19_geographic_distribution_worldwide`

To get data from all countries for a specific date, our query would be something like this (replace the date with the one you require):

SELECT * FROM `bigquery-public-data.covid19_ecdc.covid_19_geographic_distribution_worldwide`

WHERE date = '2019-12-31'

Finally, to get all the data for a specific country, our query would look something like this (replace the geo_id with the one you require):

SELECT * FROM `bigquery-public-data.covid19_ecdc.covid_19_geographic_distribution_worldwide` WHERE geo_id = 'UK'
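
Because the date and country code arrive straight from the URL, splicing them into the SQL text invites SQL injection. As a hedged sketch of an alternative, one function can emit only fixed SQL text containing BigQuery named parameters (@date, @geo_id) and return the values separately. The `CovidQuery` class is hypothetical and this is not the get_data() implementation from the repository.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper: produces the SQL text plus named parameter values
// for the three requests, without splicing user input into the SQL.
public static class CovidQuery
{
    private const string Table =
        "`bigquery-public-data.covid19_ecdc.covid_19_geographic_distribution_worldwide`";

    // If both arguments are supplied, the date filter takes precedence.
    public static (string Sql, Dictionary<string, object> Parameters) Build(
        string date = null, string geoId = null)
    {
        var sql = $"SELECT * FROM {Table}";
        var parameters = new Dictionary<string, object>();

        if (date != null)
        {
            // Throws FormatException for anything that is not yyyy-MM-dd.
            var day = DateTime.ParseExact(date, "yyyy-MM-dd", CultureInfo.InvariantCulture);
            sql += " WHERE date = @date";
            parameters["date"] = day; // intended to be bound as a DATE parameter
        }
        else if (geoId != null)
        {
            sql += " WHERE geo_id = @geo_id";
            parameters["geo_id"] = geoId; // intended to be bound as a STRING parameter
        }

        return (sql, parameters);
    }
}
```

With the Google.Cloud.BigQuery.V2 client, each entry would typically become a `BigQueryParameter` passed to `ExecuteQuery` alongside the SQL, so the values never become part of the query text.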

There are many other queries we could have coded, but for the purposes of this demo I wanted to keep it short and simple.

Preparing the Source Code

Before we start we need to get our source code ready. Once you have downloaded the code, you will need to create a service account in the GCP project you will be using and download its JSON key file to the root of the Visual Studio project. You can find details of how to do this here. You then need to open the solution, open the BigQuery.cs file and change the following code:

const string PROJECT_ID = "YOUR PROJECT ID";

Paste in your GCP project ID where the code says “YOUR PROJECT ID”.

string jsonpath = "NAME OF YOUR CREDENTIAL JSON FILE";

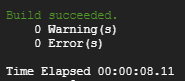

Replace “NAME OF YOUR CREDENTIAL JSON FILE” with the name of the actual JSON file you downloaded from GCP and placed in the root folder. Once you have made these two minor modifications you can compile your source code. Make sure you also publish the code, so that the bin/Debug/netcoreapp3.1/publish/ folder referenced in the Dockerfile exists. Once it compiles cleanly you can move on.

Build a Docker image with Cloud Build

In future articles I will use Cloud Build to automate builds and deployments to Cloud Run using continuous deployment from my git repository. For now, we are going to do everything from Cloud Shell. Firstly, we need to get our code into our Cloud Shell environment. I simply zip up my code, upload it into Cloud Shell and then unzip it. At this point, if you want to verify the code is still working, you can cd into the CloudRun-DotNetCore directory and execute the following command:

dotnet build

The output should look like this:

Now we are ready to build the Docker image. Every time we make a change to the code we need to build a new version of the image. There are many ways of doing this but, as I stated earlier, I’m going to keep it simple. We are going to use Cloud Build to build the image and publish it to the Container Registry. Before you run the command, make sure you are at the same folder level as the Dockerfile and that there is no .gitignore file in the folder; if there is one, remove it. When no .gcloudignore file is present, gcloud uses .gitignore to decide which files to skip, so a typical Visual Studio .gitignore would stop the published output under bin/ from being uploaded. The command to build the Docker image is:

gcloud builds submit --tag gcr.io/YOUR_PROJECT_ID/DOCKER_IMAGE_NAME

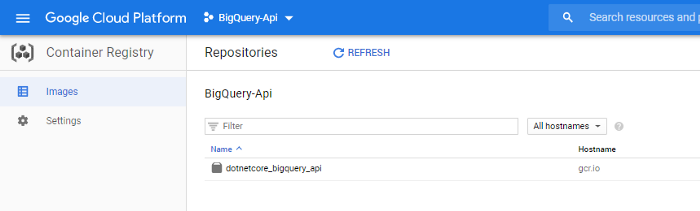

You may get some prompts about enabling services; you can just select ‘yes’ to them all. If everything goes well you should see your Docker image published in the Container Registry.

Deploy docker image using Cloud Run

The final step in our solution build is to deploy our Docker image on the Web. Cloud Run will do everything for us here. All we have to do is choose a SERVICENAME, which will form part of the exposed URL, and update the reference to our Docker image.

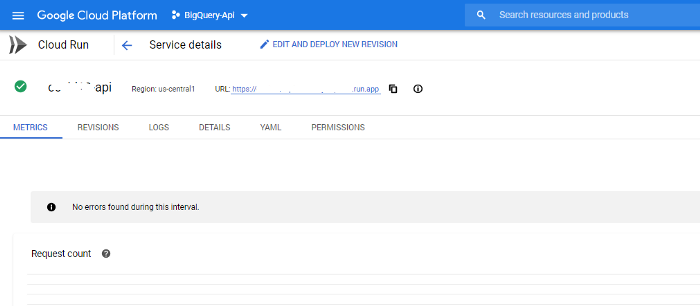

gcloud run deploy SERVICENAME --image gcr.io/YOUR_PROJECT_ID/DOCKER_IMAGE_NAME --platform managed --region us-central1 --allow-unauthenticated

Finally, we have a public URL that we can use to start fetching the data. I have masked mine out here as it won’t be available by the time you are reading this.

Test the API

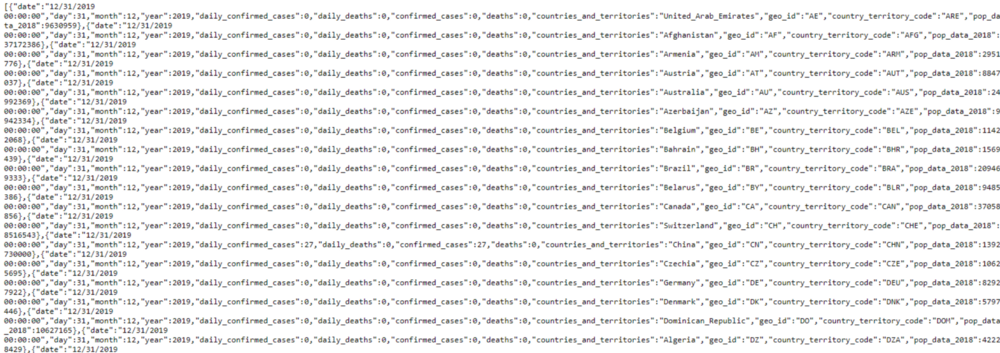

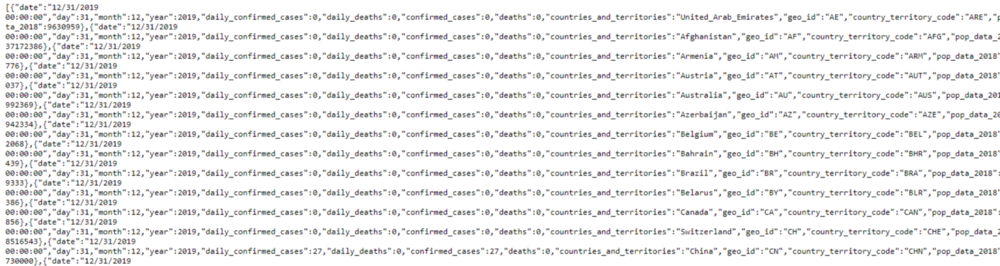

Let’s try to get the data through our browser, using the following endpoints.

Data from all countries

<base url>/country

Data for a specific date

<base url>/country/day/2019-12-31

Data for a specific country

<base url>/country/code/UK

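The same three endpoints can be called from code rather than from the browser. The sketch below only builds the request URLs from the paths listed above. The `ApiEndpoints` class is hypothetical, and the base URL is whatever Cloud Run assigned to your service.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: builds the three endpoint URLs from a base URL.
// The base URL should end with a slash (or have no path at all), because
// Uri resolution replaces the last path segment otherwise.
public static class ApiEndpoints
{
    // Data from all countries.
    public static Uri AllCountries(Uri baseUrl) =>
        new Uri(baseUrl, "country");

    // Data for all countries on a specific day, formatted as yyyy-MM-dd.
    public static Uri ForDay(Uri baseUrl, DateTime day) =>
        new Uri(baseUrl, "country/day/" +
            day.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture));

    // Data for a specific country; the code is escaped for use in a path.
    public static Uri ForCountry(Uri baseUrl, string geoId) =>
        new Uri(baseUrl, "country/code/" + Uri.EscapeDataString(geoId));
}
```

Each resulting Uri can then be fetched with, for example, `HttpClient.GetStringAsync`.
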
Conclusion

This guide has shown you how simple it is to develop a Data API with .NET Core and GCP serverless products such as Cloud Run and BigQuery. We could use this data to build dashboards using Data Studio or similar products, or even put a Blazor front end onto it. I may do this in a future article. I may also provide an update to this article that uses continuous deployment from git using Cloud Build. I will also write an article that shows how we could use Terraform to deploy the container to Cloud Run. If you have any questions you can reach out to me on LinkedIn.